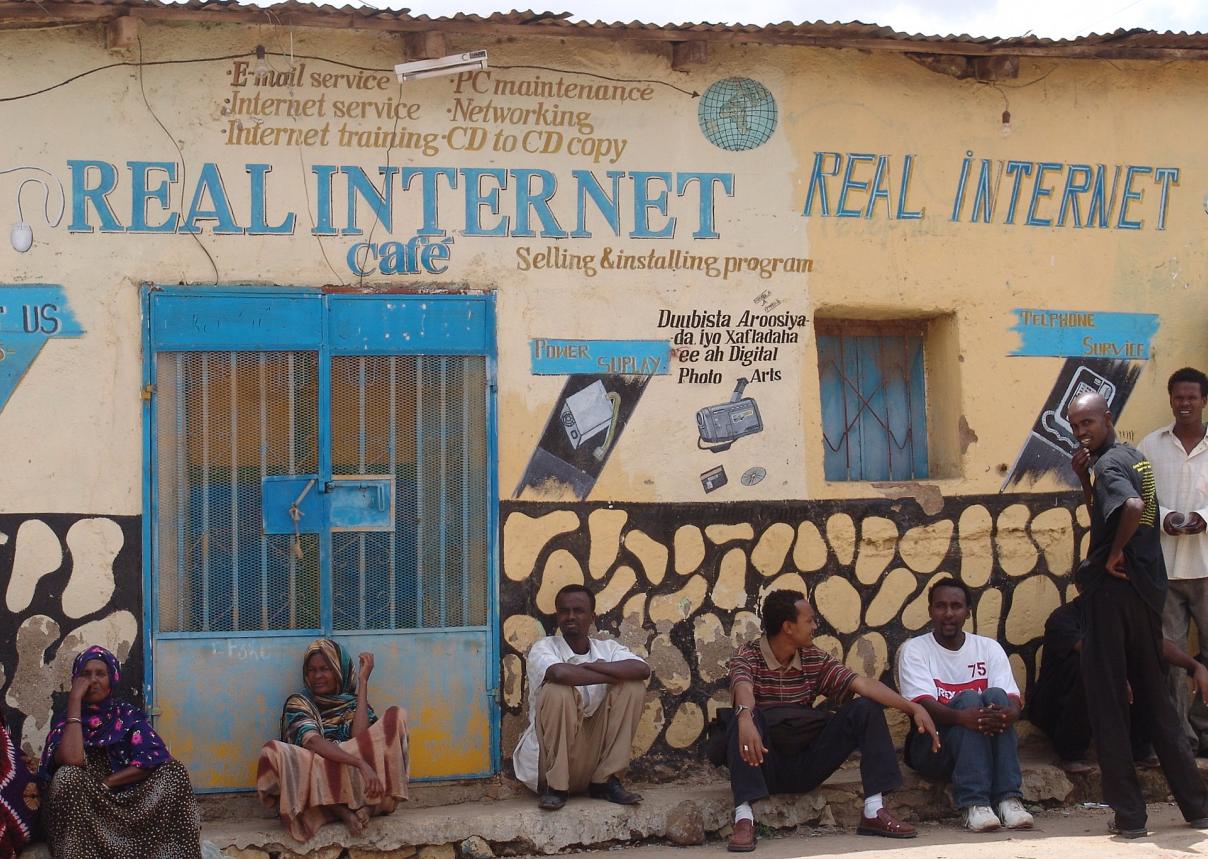

Image by Charles Roffey used under Creative Commons license.

Each week David Souter comments on an important issue for APC members and others concerned about the Information Society. This week’s blog post looks at the challenge of measuring the impact of ICT4D.

It’s tempting to write this week about the impact of the US presidential election on the Internet or on ICT4D (the use of ICTs in development programmes and activities). I’ll resist that, though, and stick to my original plan: to write about the challenge of measuring the impact of ICT4D itself. At the end, I’ll tell you why.

Why should we measure ICT4D?

I’ve talked in previous posts about the gap in understanding of ICT4D between its advocates and the rest of the development community. ICT4D’s advocates tend to talk up what ICTs can do, or could do in favourable circumstances. Mainstream development professionals tend to emphasise the difficulties of development, such as the limited infrastructure and human capacity that make circumstances less than ideal and limit the effectiveness of ICTs.

What we need is more evidence and assessment of the actual impact on people’s lives, of ICTs in general and of ICT4D projects and programmes in particular. And we have much more evidence today than we had ten years ago. As the World Bank and others have shown, the big picture that this evidence reveals isn’t quite as bright as had been hoped. So why isn’t reality matching up to expectations?

Measuring the impact of ICTs on development isn’t easy. This week I’ll point to some reasons why it’s challenging – drawing on an investigation I made a while back for the UK Department for International Development (DFID), APC and other agencies. (You can find the longer version in Investigation 4 here.)

What is ICT4D?

When we look at ICTs in development, we’re really looking at two things:

- at the impact which ICTs are having on economies and societies in general;

- and at the impact which individual projects and programmes are having on their target beneficiaries and others.

It’s the latter – specific interventions by governments and other stakeholders – that we generally call ICT4D.

How is impact assessment different from evaluation?

It’s important to distinguish impact assessment from evaluation. To illustrate, I’ll take an ICT4D example.

Suppose a project aimed to use GPS to help fishing communities in certain villages to increase their catch and earnings. Evaluation would focus on those communities, their fisherfolk, their catches and their earnings.

Impact assessment would go much further and much deeper. It would ask about the effect on other villages as well as those within the project; about the consequences for nutrition; about the impact on fish stocks; about the uses to which greater earnings (if achieved) were put; about the distribution of wealth and power within communities; about the impact on gender, education and health; about unintended consequences as well as project goals; and about the impact after the project has concluded as well as the impact while it’s going on.

Evaluating projects tells us only part of what is happening because it focuses on project goals and target beneficiaries. If we want the whole picture, we have to look at impact, which can be very different.

Why is impact assessment difficult?

Why is this difficult? I’ll suggest five reasons.

First, because change is complex. ICTs aren’t the only new things that are happening in developmental contexts. It’s hard to attribute outcomes to ICTs or other factors when a lot of change is happening, and many changes interact. And contexts vary hugely – because of politics, because of economic and social structures, because of cultural norms and inequalities, because of geography and demography. Unless we understand the relationship between a project’s impact and its context, we’ll learn little about translating it elsewhere.

Second, because the evidence base we have is weak. Most data sets are poor in developing countries because there’s not the infrastructure to collect them. How can we tell what’s changing if we don’t know where we’re starting from – if we’ve no proper baseline and no real confidence in the numbers that we’re using?

Third, because people gain and lose to different degrees from development interventions. We’ve learned by now that, in many cases, ICTs are likely to benefit the better-off more than those with fewer resources. Outcomes need to be disaggregated, with special attention paid to gender, to power structures and to marginalised groups. If not, the left behind could well be left behind again.

Fourth, because evaluation doesn’t reach out far enough. The biggest impacts might be unintended, unexpected and unmeasured. They might be on groups the project never planned to target. And they might be positive or negative.

Fifth, because assessing impact takes so long. The aim of ICT4D is lasting change. But lasting change can only be measured over longer periods, once a project is over (and is no longer getting special funding). We don’t really know the outcomes until it’s too late to act upon them.

Why’s it even harder to assess the impact of ICT4D?

And there are reasons why it’s even harder to assess impact in ICT4D. Let’s take those points again.

The context for communications is hugely variable and changing very fast. Think of the speed with which people have adopted mobile phones. Few project planners at the time of WSIS (the World Summit on the Information Society) expected that to happen. Many projects focused services on telecentres and had to reconfigure as most target beneficiaries bought their own handheld devices.

The evidence base for ICTs is particularly weak (because the sector changes so quickly) and poorly related to other data sets. We know too little about how people are making use of their devices and the services available, and how their use is changing. Too many big decisions in ICT4D are made on the basis of small samples which may not be representative. And next year’s data (when a project is delivered) may be very different from last year’s (when it’s planned).

Evaluation’s focused heavily on intended outcomes and target beneficiaries. But the pace of change means that there are likely to be more unexpected or unanticipated outcomes from projects in ICT4D, that these may be more important than elsewhere, and that they’ll interact more fully with other aspects of people’s lives. Many of the outcomes of new technology result from people experimenting to meet their own needs rather than doing what project planners would like them to do.

And changes in ICTs are so fast that, by the time a project’s run its course, its context has changed and its technology is out of date. Pilot projects have less value because, by the time they’ve been evaluated, it no longer makes much sense to repeat them in the way they were done.

Looking at the bigger picture

This makes it more important to focus on assessing the impact of individual projects in ICT4D – but it also reinforces why project assessment’s insufficient for assessing impact.

It makes it more important to keep track of outcomes from individual projects – particularly unexpected consequences and the distribution of gains and losses between different groups in the community.

It makes it more important, too, that evaluation and impact assessment are done with proper rigour (and, preferably, independence). Too many ICT4D evaluations have been made by those with vested interests, looking for successes that donors like while neglecting failings from which lessons should be learnt. Too few have involved target beneficiaries and others who have been affected in assessing what has happened to their lives.

But the pace of change and the ways in which people are using ICTs mean that assessing individual projects is very far from assessing the impact of ICTs. The biggest impacts ICTs are having on development arise from the ways in which people use them on their own initiative rather than from programmes and projects designed by governments and other stakeholders. We need to assess the impact of ICTs in general even more than we need to assess their impact in programmes and in projects.

And the impact of that US election?

So why did I decide not to focus this week’s post on the impact of the US presidential election? Because, of course, it’s too early to tell. I may speculate in future weeks, though, when the dust has settled a bit and the new president is in office.

Next week’s blog will look at the Internet and jurisdiction, reporting from an international conference to be held in Paris.

David Souter is a longstanding associate of